Proven impact, measurable results

150+

Robots deployed in production

< 2 ms

Real-time 6DoF pose updates

CAD only

Setup from CAD, ready in minutes

Why industrial vision fails

Robots don’t fail because “AI is not good enough.”

They fail because vision models are trained and built for clean, predictable, repeatable environments, not for real factories.

Real factories mean:

- Harsh lighting, reflections, shadows

- Vibrations and unstable fixtures

- Moving lines with unpredictable speed

- Hard, shiny parts with little surface texture

- Cycle times that tolerate no delay

Every other vision model breaks here. Ours doesn’t.

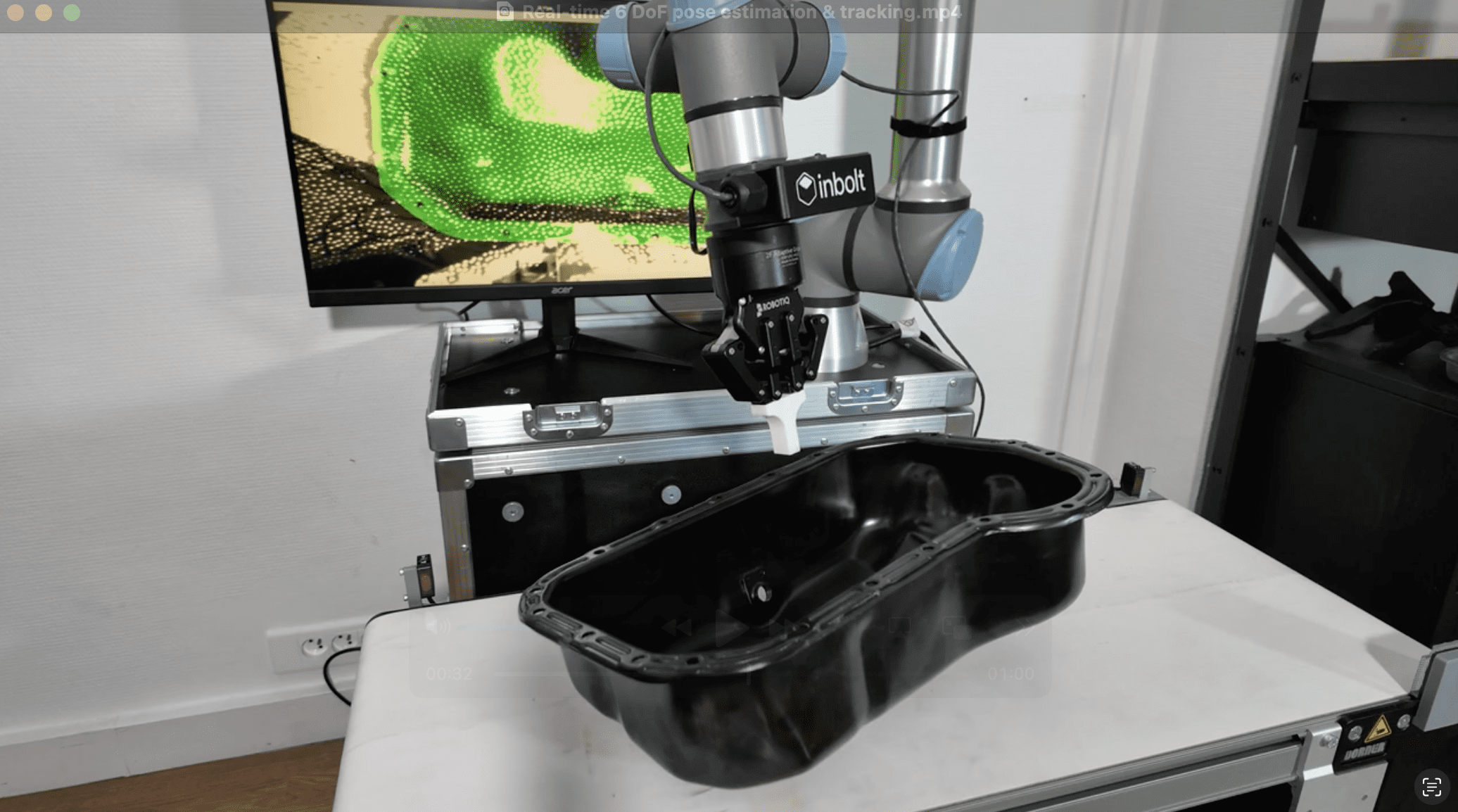

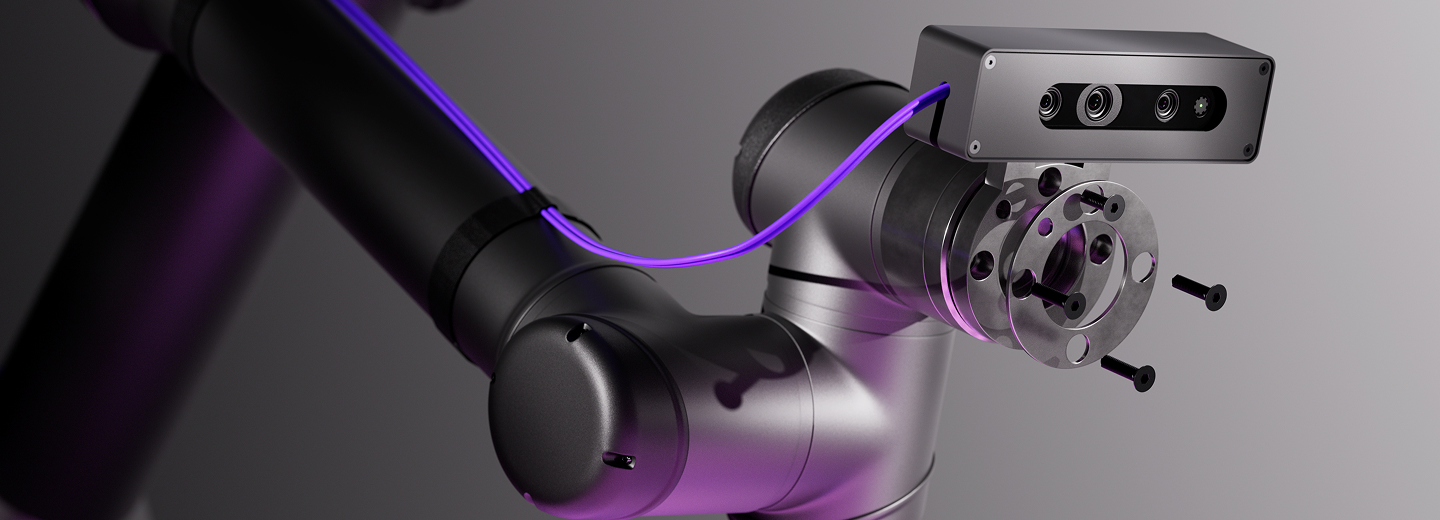

Core vision model capabilities

Real-time 6DoF tracking, sub-millimeter accuracy, CAD-based setup, and robustness built for real factory conditions.

Real-time (<2 ms)

The model updates fast enough to enable dynamic decision-making and continuous robot trajectory correction.

No buffering. No batching. No timeout spikes.

Dynamic tracking on moving lines

Adapts to changing speeds, jumps, vibration, rotation, and misaligned parts. With robot drivers running up to 500 Hz, the model delivers real-time 6DoF tracking that finally makes continuous moving-line automation achievable.
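As a quick consistency check on the figures above, the control period at the maximum quoted driver rate follows from T = 1/f. The line speed v = 0.1 m/s in the second expression is an illustrative assumption, not an Inbolt figure.

```latex
T = \frac{1}{f} = \frac{1}{500\ \text{Hz}} = 2\ \text{ms},
\qquad
d = v\,T = 0.1\ \tfrac{\text{m}}{\text{s}} \times 0.002\ \text{s} = 0.2\ \text{mm}
```

So a pose update delivered in under 2 ms fits within one control cycle at 500 Hz, and at the assumed line speed a part moves about 0.2 mm between consecutive corrections.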

Sub-millimeter accuracy

Validated across diverse factory environments, the model delivers sub-millimeter accuracy for fastening, assembly, and loading/unloading tasks.

CAD-based pipeline

Upload your CAD model, the vision model fine-tunes on-device, and you’re ready in minutes. No datasets, no labeling. The pipeline is built to scale across hundreds of stations.

Multi-object understanding

Detects and tracks multiple parts in cluttered or semi-ordered scenes, making it ideal for bin picking, random presentation, and high-variation workflows.

Robustness to factory variability

The model handles lighting changes, shadows, reflections, specular surfaces, occlusions, vibration, and shifting backgrounds. Built to stay reliable in real factory conditions.

Why real-time matters

Real-time robot pose estimation and tracking is the key to unlocking mass automation.

Automation without limits

Real-time tracking makes moving lines, flexible flows, and semi-structured stations finally automatable.

Higher station reliability

Robots remain accurate even as parts shift, lighting changes, or stations wear over time.

Latency that drives speed

Ultra-low latency means faster robot moves, tighter cycles, and higher overall output.

Precision when it matters

Robots stay precise in dynamic or unstable conditions thanks to millisecond-level tracking.

Real-time robot control layer

Real-time perception only matters if the robot can respond instantly. Inbolt’s custom control drivers convert high-frequency pose updates into smooth, deterministic motion, closing the loop between vision and action.

Our drivers:

- Stream trajectory corrections at up to 500 Hz, depending on robot brand

- Maintain stable motion under vibration and varying line speeds

- Ensure deterministic, repeatable cycle times

This is the only real-time perception-to-control stack deployed at scale in factories today.
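To illustrate what turning high-frequency pose updates into smooth motion can look like, here is a minimal sketch assuming a fixed 500 Hz control cycle and a simple per-axis rate limit on each correction. It is not Inbolt’s driver: the names `next_correction` and `run_cycles` and the 0.5 mm step limit are hypothetical, and the sketch handles translation only, whereas a real 6DoF driver would also limit rotation.

```python
# Hypothetical sketch, NOT Inbolt's driver: rate-limited trajectory correction.
# Translation only; a full 6DoF correction would also bound rotation per cycle.
from typing import Tuple

Vec3 = Tuple[float, float, float]

CONTROL_RATE_HZ = 500.0          # assumed driver rate (upper bound quoted above)
CYCLE_S = 1.0 / CONTROL_RATE_HZ  # control period: 0.002 s = 2 ms
MAX_STEP_M = 0.0005              # hypothetical per-cycle correction limit (0.5 mm)


def clamp(value: float, limit: float) -> float:
    """Limit value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


def next_correction(target: Vec3, current: Vec3, max_step: float = MAX_STEP_M) -> Vec3:
    """Per-axis step toward the latest pose estimate, capped at max_step."""
    return tuple(clamp(t - c, max_step) for t, c in zip(target, current))


def run_cycles(target: Vec3, start: Vec3, cycles: int) -> Vec3:
    """Apply one bounded correction per control cycle; return the final position."""
    pos = start
    for _ in range(cycles):
        step = next_correction(target, pos)
        pos = tuple(p + s for p, s in zip(pos, step))
    return pos
```

For example, `run_cycles((0.002, 0.0, 0.0), (0.0, 0.0, 0.0), 2)` moves x by 0.5 mm per cycle, reaching about 1 mm after two cycles (4 ms). Capping the step per cycle is one simple way to keep motion smooth when a new pose estimate jumps; a production driver would likely use more elaborate trajectory filtering.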

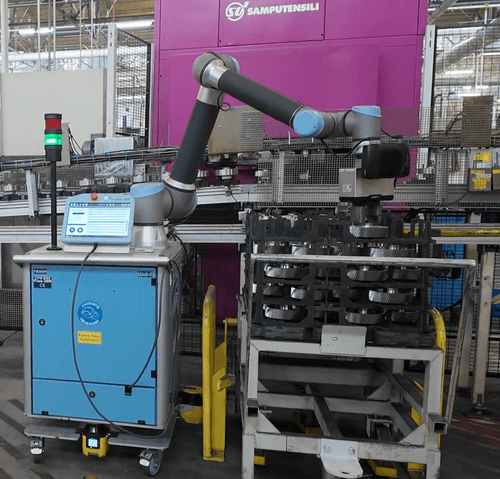

Proven at scale

150+

Robots deployed across top automotive OEMs

2M

Hours of continuous operation

99.99%

Detection reliability across real industrial conditions

Explore more from Inbolt

Access articles, use cases, and resources to see how Inbolt drives intelligent automation worldwide.

NVIDIA & UR join forces with Inbolt for intelligent automation

Reliable 3D Tracking in Any Lighting Condition

KUKA robots just got eyes: Inbolt integration is here

Explore what’s possible

Want to explore how the Inbolt Vision Model can fit your robots, products, or production lines? Contact us to discuss your needs or join the waitlist for early access.