The Rise of Autonomous Industrial Robotics: From Structured to Unstructured Environments

We have all heard a lot about structured, semi-structured, and unstructured data. A trend now on the rise in manufacturing is the move toward semi-structured and unstructured environments, which are significantly more difficult to automate.

From Structured to Unstructured

In robotics, we usually categorize robotic environments into three types: structured, semi-structured, and unstructured.

Structured environments are defined spaces (cells) where the robot moves along a predetermined path. These environments often use mechanical jigs and cages to ensure maximum robot accessibility and efficiency, as well as repeatability in terms of the position of the workpiece.

This environment can be referred to as a “constrained” one. However, any change to the environment can lead to robot collisions and quality defects.

Semi-structured environments are mostly constrained, but they tolerate some unknown variation, such as the position of the workpiece. Intelligence is programmed into the robot to help it react to these changes, but its flexibility is limited by its perception capabilities.
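To make the semi-structured case concrete, here is a minimal sketch of how a taught path could be shifted when perception finds the workpiece somewhere other than its nominal position. It assumes a planar (x, y, theta) pose and a hypothetical `correct_waypoints` helper; neither comes from a specific robot controller.

```python
import math

def correct_waypoints(waypoints, nominal_pose, measured_pose):
    """Re-express waypoints taught at nominal_pose for a part found at measured_pose.

    Poses are (x, y, theta) in the robot base frame, theta in radians.
    Waypoints are (x, y) points in the robot base frame.
    All names here are illustrative assumptions.
    """
    nx, ny, ntheta = nominal_pose
    mx, my, mtheta = measured_pose
    dtheta = mtheta - ntheta
    cos_d, sin_d = math.cos(dtheta), math.sin(dtheta)

    corrected = []
    for wx, wy in waypoints:
        # Offset of the waypoint from the nominal part origin.
        rx, ry = wx - nx, wy - ny
        # Rotate that offset by the change in part orientation,
        # then re-attach it to the measured part origin.
        corrected.append((mx + cos_d * rx - sin_d * ry,
                          my + sin_d * rx + cos_d * ry))
    return corrected

# A part taught at the origin is found shifted and rotated by 90 degrees.
taught_path = [(1.0, 0.0), (1.0, 0.5)]
new_path = correct_waypoints(taught_path, (0.0, 0.0, 0.0), (2.0, 1.0, math.pi / 2))
```

The correction is only as good as the measured pose, which is why perception quality bounds the flexibility of a semi-structured cell.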

Unstructured environments include most natural environments. They can be cluttered and filled with obstacles. Navigating them requires real-time perception and decision-making, much as our eyes and brains provide.

From Planned to Unplanned

Similarly, we can categorize events around us into two types:

Planned events, where the robot’s response to a situation is already programmed in advance.

Unplanned events, where something unexpected happens, such as someone entering the robot’s workspace or a random workpiece appearing. The robot needs to perceive the event in real time and adjust its movements accordingly.

Less Structured Environments will be at the Heart of Tomorrow’s Manufacturing Industries

Until recently, most robots were pre-programmed industrial robots designed for repetitive tasks in structured environments. Their purpose was to automate tasks that would otherwise be performed by humans.

But the future calls for robots to do more than just run automated processes in the background.

These new robots won’t replace human jobs but enhance them by taking on tasks that can be automated, freeing up time for more human-centered activities (we discussed this phenomenon in our newsletter).

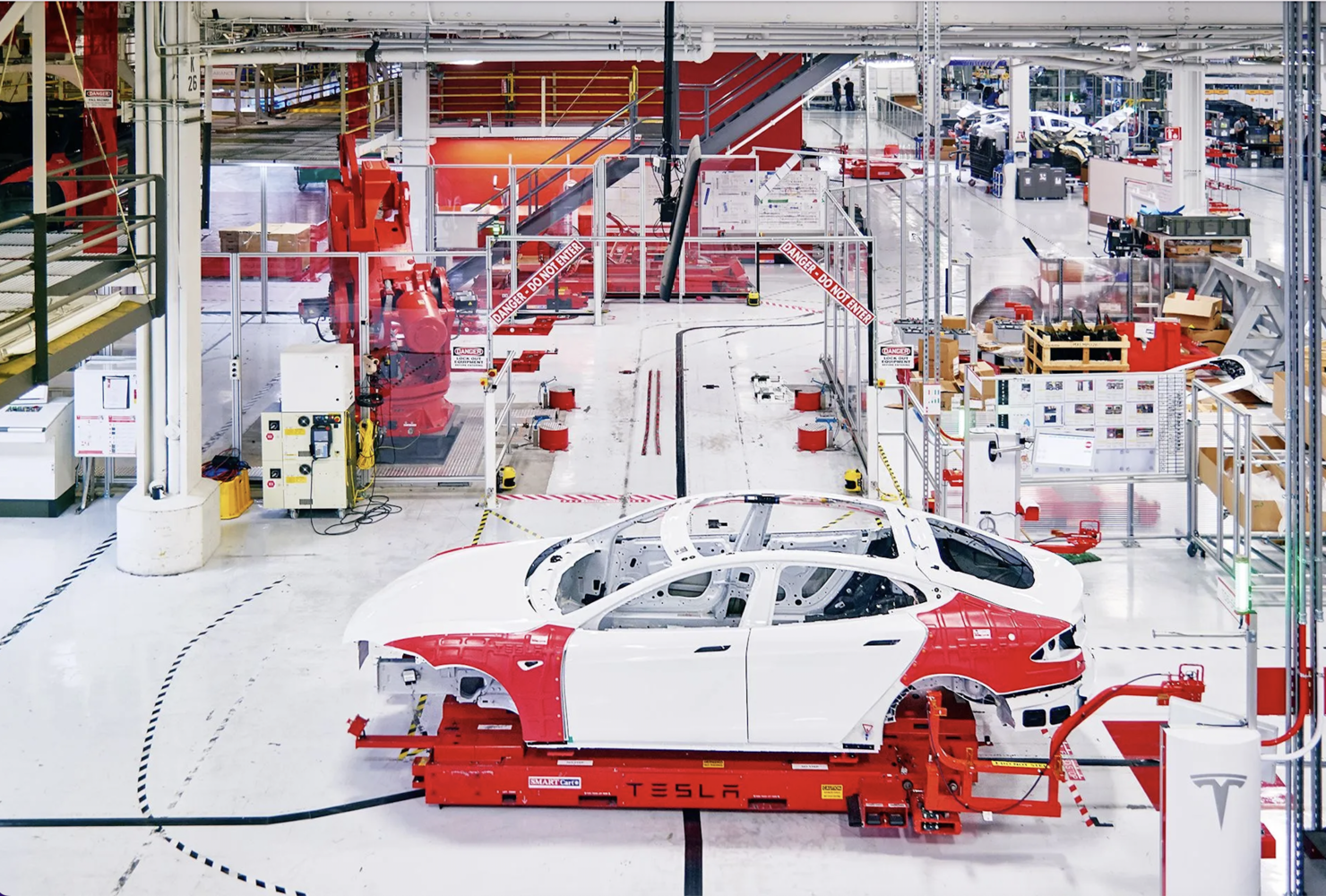

These robots will be able to operate in semi-structured and cluttered environments, and have the ability to continuously learn and adapt to unexpected situations. Some might even be able to operate in fully unstructured environments, though we aren’t quite there yet. Tesla is one of the most notable companies that use flexible assembly lines.

We’re seeing a paradigm shift in industrial robotics, just like we did with autonomous driving.

Autonomous Industrial Robotics is on the Rise

This shift is powered by better and more affordable sensors, greater computing power, and a change of mindset among manufacturers. Indeed, small and medium-sized businesses (SMBs) are increasingly willing to automate their manufacturing processes, which remain largely unautomated today. Because of their high share of manual labor, such companies have very unstructured and unplanned production facilities. Making industrial robots autonomous will enable flexible, low-cost automation for them. Large OEMs, for their part, are under pressure to reduce production costs and are also driving the adoption of autonomous industrial robotics.

This paradigm shift will have several impacts on the industry, as it will:

- Eliminate the need for jigs and indexing systems, increasing manufacturing flexibility and making it easy to launch a new product reference. This has been a hot topic for years but has really started to take off with the growing need for personalisation.

- Reduce automation costs by decreasing infrastructure and minimising the impact of the cell.

- Reduce commissioning time for the cells, which will no longer require millimetre-level trajectory fine-tuning, thus eliminating the need to plan everything in advance.

- Improve safety and process quality.

Autonomous Industrial Robotics Driven by Real-time Environment Perception & Decision-making

Vision sensors allow robots to see the environment or the workpiece they are trained to work on.

2D vision sensors are electronic imaging devices that capture and process two-dimensional images.

They offer high accuracy and repeatability, high resolution, fast and reliable processing, and good scalability and flexibility. They also provide data in real time, allowing for better decision-making and greater efficiency. 2D vision sensors are used to automate tasks such as quality control and inspection, robotic guidance, and human-machine interaction. They are a cost-effective and reliable solution, but they have their limits.

They cannot detect depth or measure the size of objects, and they cannot recognise objects that are hidden from sight or occluded. Their accuracy is affected by lighting conditions, object shape, and object location. As a result, objects that are far away or difficult to detect may not be detected at all. 2D vision sensors also struggle with textured surfaces, which can make them less reliable.

3D vision sensors are more robust and offer more advantages than 2D sensors.

A variety of technologies are used for 3D vision in industrial robotics.

Some of the more common technologies include stereo vision, structured light, and time-of-flight cameras. Stereo vision typically uses two cameras to produce a 3D image, while structured light involves projecting a pattern onto the object being scanned and then analyzing the deformation of the pattern. Time-of-flight cameras, on the other hand, emit infrared light and measure how long it takes to return in order to determine the distance to the object being scanned.
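The geometry behind two of these techniques can be sketched in a few lines. The example below assumes an idealized, rectified stereo pair and a direct (pulsed) time-of-flight measurement; real sensors add calibration, filtering, and often phase-based measurement. The function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth of a point seen by two parallel cameras.

    disparity_px is the horizontal shift of the point between the
    left and right images; a larger shift means a closer point.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a visible point")
    return focal_length_px * baseline_m / disparity_px

def tof_distance(round_trip_time_s):
    """Distance from the time light takes to reach the object and return."""
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0

# Illustrative numbers only: an 800 px focal length, a 10 cm baseline,
# and a 40 px disparity; then a 20 ns round trip.
depth_from_stereo = stereo_depth(800.0, 0.10, 40.0)
depth_from_tof = tof_distance(20e-9)
```

Both formulas show why accuracy varies with range: stereo depth becomes very sensitive to small disparity errors for distant objects, and time-of-flight requires timing resolution well below a nanosecond to resolve centimetres.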

Whatever the method chosen, these technologies generate 3D point clouds, which can vary in density and accuracy, as mentioned in our article.
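As a rough illustration of how a point cloud is obtained from a depth image, the sketch below back-projects each pixel through a pinhole camera model. The intrinsics (`fx`, `fy`, `cx`, `cy`) and the convention that a depth of zero marks an invalid pixel are assumptions made for this example, not the output format of any particular sensor.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Convert a depth image (rows of depths in metres) to a list of (x, y, z) points.

    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    Pixels with a non-positive depth are treated as invalid and skipped,
    so the density of the cloud depends on how many pixels returned a depth.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points

# A tiny 2x2 depth image with two invalid pixels.
depth_image = [[0.0, 2.0],
               [1.0, 0.0]]
cloud = depth_to_point_cloud(depth_image, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```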

Inbrain, Today’s Fastest Point Cloud Processing AI, Will Power This Revolution

At Inbolt, we developed the world’s fastest point cloud processing AI: Inbrain.

This state-of-the-art AI technology processes massive amounts of 3D data at a very high frequency, making real-time robot control possible thanks to continuous feedback from 3D vision sensors.

Inbrain is hardware agnostic and can process data from all types of 3D technology. Such high-frequency robot control makes it possible to have robust, reactive, and safe robot guidance, even in unstructured and unplanned environments.
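To illustrate in general terms why feedback frequency matters, here is a generic closed-loop sketch: at every cycle the controller reads the latest target position from perception and moves a bounded step toward it. This is a textbook proportional controller with hypothetical names and parameters, not a description of how Inbrain works.

```python
import math

def servo_step(tool_pos, target_pos, gain=0.5, max_step=0.01):
    """One control cycle: move a fraction of the remaining error,
    capped at max_step metres so a sudden target jump cannot cause a large move."""
    step = [gain * (t - p) for p, t in zip(tool_pos, target_pos)]
    norm = math.sqrt(sum(s * s for s in step))
    if norm > max_step:
        step = [s * max_step / norm for s in step]
    return [p + s for p, s in zip(tool_pos, step)]

def track(tool_pos, target_stream, gain=0.5, max_step=0.01):
    """Follow a stream of target positions, one perception update per cycle."""
    for target in target_stream:
        tool_pos = servo_step(tool_pos, target, gain, max_step)
    return tool_pos

# A stationary target 5 cm away, observed for 200 cycles.
final_pos = track([0.0, 0.0, 0.0], [[0.05, 0.0, 0.0]] * 200)
```

The more often the target estimate is refreshed, the smaller each correction needs to be, which is what lets a loop like this follow a moving or misplaced workpiece smoothly.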

GuideNOW, a real-time robot guidance system, is our first product powered by Inbrain.
